Xcopy File Path Limits

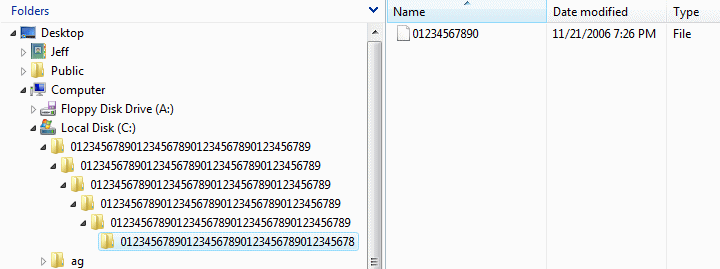

Every year we have to migrate a large number of files (typically around half a million at a time) between servers. I find the task painstaking every time, not for lack of knowledge of Command Prompt and PowerShell, but because of the issues we always come up against. These fall into three main groups:

Non-enforcement of the 260-character file path limit by Windows at write. This is a well-known flaw in Windows. An app can (under certain circumstances) write files with paths longer than 260 characters, but because such paths are invalid, these files cannot be accessed or processed without first using the 8.3 filename method and individually renaming folders and files to bring the path down to fewer than 260 characters.
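Before a migration it can help to list the offending paths up front. The following is a minimal sketch, not a tested migration tool: the function name `find_long_paths` and its `limit` parameter are made up for illustration. It assumes the script can enumerate the tree at all, which on older Windows versions may itself fail for over-long paths.

```python
import os
from typing import Iterator

# MAX_PATH is 260 in the Windows API. That count includes the terminating
# NUL, so a path of 260 or more characters is already over the limit.
MAX_PATH = 260


def find_long_paths(root: str, limit: int = MAX_PATH) -> Iterator[str]:
    """Yield each file or folder path under root whose length is >= limit."""
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            full = os.path.join(dirpath, name)
            if len(full) >= limit:
                yield full
```

Calling `list(find_long_paths(some_root))` on the source tree gives a worklist of folders and files to shorten before the copy starts.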

May 30, 2016 - The 260-character path length limit in Windows can be removed with the help of a new policy, allowing you to work with files regardless of the length of their path or file name. The policy is not enabled by default, but admins can turn it on through the Registry Editor or, on Windows Server 2016, by enabling NTFS long paths through Group Policy. Microsoft documents the Maximum Path Length Limitation on MSDN: "In the Windows API (with some exceptions discussed in the following paragraphs), the maximum length for a path is MAX_PATH, which is defined as 260 characters." Enabling NTFS long paths allows manifested Win32 applications and Windows Store applications to access paths beyond the normal 260-character limit per node. With the setting enabled, long paths become accessible within the process.

Non-enforcement of filename validation by Windows at write. This one, like the first, is an old Windows design flaw that has probably been around for as long as NT. Also like the first, it comes down to Windows not performing basic validation when a file is written, only this time the problem is files being written with invalid characters in their names.

Randomly dropped files during copy. When I say random, I mean it. The copy process is running under domain admin and, where applicable, elevated privileges.

When copying a folder with thousands of files, each of which the SysAdmin has full permission to, a random scattering of files and folders can be missed. Nothing marks these items out as unusual. A second attempt to copy can be fully successful or miss a different set of random files. Anyway, I think this problem relates to copying files between 2003 and 2008 R2 only, though I'm not sure.
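For the invalid-filename problem (the second group above), a pre-flight check can flag names that Win32 tools are likely to reject. This is a rough sketch based on the documented Win32 naming rules (reserved characters, trailing spaces or periods, reserved device names). The helper name `filename_problems` is hypothetical, and the check looks at a single name component, not a whole path.

```python
import re
from typing import List

# Characters that Win32 does not allow in a file or folder name,
# plus the control characters 0-31.
RESERVED_CHARS = re.compile(r'[<>:"/\\|?*\x00-\x1f]')

# Device names that are reserved regardless of extension (e.g. CON.txt).
RESERVED_NAMES = (
    {"CON", "PRN", "AUX", "NUL"}
    | {f"COM{i}" for i in range(1, 10)}
    | {f"LPT{i}" for i in range(1, 10)}
)


def filename_problems(name: str) -> List[str]:
    """Return a list of reasons why a single name component is invalid."""
    problems = []
    if RESERVED_CHARS.search(name):
        problems.append("reserved character")
    if name.endswith((" ", ".")):
        problems.append("trailing space or period")
    if name.split(".")[0].upper() in RESERVED_NAMES:
        problems.append("reserved device name")
    return problems
```

An empty list means the name passed these three checks; anything else is a candidate for renaming before the copy.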

I generally use the Command Prompt XCopy method, sometimes with ForFiles for batch file processing. A typical command might be XCOPY D:\StaffHomeDirectories z:\StaffHomeDirectories /E /C /H /O, the usual thing. Anyway, I've more or less given up on using Windows tools for this, as I always run into the same kinds of problems.
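Whatever tool does the copying, the randomly dropped files (the third group above) can at least be detected afterwards by comparing the two trees. The sketch below is illustrative only: `relative_files` and `missing_in_target` are made-up names, it compares file names only (not sizes, timestamps, or permissions), and it treats names as case-sensitive.

```python
import os
from typing import List, Set


def relative_files(root: str) -> Set[str]:
    """Collect the path of every file under root, relative to root."""
    found = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            found.add(os.path.relpath(os.path.join(dirpath, name), root))
    return found


def missing_in_target(source: str, target: str) -> List[str]:
    """Return the sorted relative paths present in source but not in target."""
    return sorted(relative_files(source) - relative_files(target))
```

Running `missing_in_target` after each copy pass gives the list of files to retry, and shows whether a second pass missed a different set.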

Does anyone use any good 3rd party tools for this kind of thing that solve the above problems? Anything you would recommend from experience? Note that using our backup system is not desirable: although it's a reliable method, it's also painfully slow. We are running Windows Server 2003 SP2 Standard x86 and Windows Server 2008 R2 SP1 Standard x64.

I agree with these concerns. Some programs can write files without using the full path, which lets them create files whose full path is too long to be accessed.

For example, we could map a folder and create a '260 character file' inside it, which cannot then be accessed through its full path. For your current situation, a possible workaround is to use robocopy with the /R: parameter, so that it does not retry failed files (or spends less time retrying them). Log the output so that you can find the failed files later and correct them manually.

Thanks Shaon.

I have installed RKTools on our x64 2003 server. Although officially RKTools isn't supported on x64 2003, I've heard the tools still work, though I have yet to try RoboCopy. As for the 2008 R2 box, it seems RoboCopy is already built-in.

It looks promising: the help suggests that it supports paths longer than 260 characters, logging, and resuming a copy after a connection interruption. I'd heard of this tool before, but as it wasn't built in to 2003 I thought it was 3rd party. Will give it a go today and let you know if there's anything to report. Thanks again :-) - huddie.

I would recommend using robocopy over xcopy. It's much better and faster, and it can log and retry, so if you drop the connection it will try again. The logging also helps identify the files that were not copied, and multiple threads are a huge improvement over a single-threaded copy.

The 260-character limit can be avoided if you mount the source and destination to a drive letter, so that you refer to them as, say, G:, and save characters that way. Users should not be able to create folders and files with a path longer than 260 characters, or I think Windows will go a little crazy.

Ideally you should look at upgrading your backup system, or at least speeding it up. If it's really slow, I hate to think how long you would need to restore data in the event of system failure or corruption.

There are a number of reasons I prefer not to use backup apps for this.

It is not unusual for them to be slower than direct copy and if they aren't then this is surely an indication that there's some kind of bottleneck slowing the direct copy. Another is that batch processing of files during the copy process, such as you would do when using forfiles in conjunction with robocopy or xcopy is not possible, at least not with our backup software as far as I can see.

That would be robocopy.exe (pre-installed with W2k8, part of the ResKit in W2k3: Windows Server 2003 Resource Kit Tools). Try the /COPYALL option, and the /MIR option if you want to do several separate runs and delete files in the target that have been deleted in the source. Note that robocopy by default only copies files that don't exist in the target yet, or whose size or timestamp differs from the copy there, so you can just run it several times and it will only copy the differences after the initial run.

Some other hints:

- /mir already includes /e, so there is no need to add /e or /s.
- /nfl and /ndl suppress the file and folder listing of successfully copied files and folders; errors will still be logged. Log entries for files that were copied successfully are usually of no interest and only clutter up the log.
- /r and /w are usually unnecessary in a LAN; if a copy doesn't work, it's mostly 'access denied', either because the file is in use or because someone thought he was so very smart that he doesn't need his files backed up and denied admin access. Retries won't change that and will only slow down the copy.
- /np disables the progress indicator. The indicator is a very nice thing if you have the time to stare at the screen, willing the percentage to move, and if you copy files that are so large that a progress indicator actually makes sense. When writing to a log, it's totally counterproductive, because it fills the log with control characters.
- /z, /b, or /zb will slow down the copy because of the additional overhead, with not much benefit in a LAN. /z is useful when copying over WAN connections, and /b only if the account you're using doesn't have full control over the folder tree.
- You might want to pre-create the target 'Main' folder and set the same permissions as on the source folder; in my experience, robocopy sometimes fails to set the permissions correctly when it has to create the target folder. Everything below should be processed correctly.
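Put together, the hints above translate into a fairly short command line. The sketch below only assembles the argument list; the function name `build_robocopy_args` and the example paths are hypothetical, and /R:1 /W:1 is an assumed reading of "retries are usually unnecessary in a LAN" (robocopy's own defaults retry far longer).

```python
from typing import List


def build_robocopy_args(source: str, target: str, log_file: str) -> List[str]:
    """Build a robocopy command line that follows the hints above."""
    return [
        "robocopy", source, target,
        "/MIR",       # mirror the tree; already includes /E
        "/COPYALL",   # copy data, attributes, timestamps, ACLs, owner, auditing
        "/R:1",       # assumption: one retry only
        "/W:1",       # assumption: wait one second between retries
        "/NFL",       # do not list successfully copied files
        "/NDL",       # do not list successfully copied folders
        "/NP",        # no progress indicator, keeps the log clean
        f"/LOG:{log_file}",
    ]
```

On a Windows host the list could be passed to `subprocess.run(...)`. Robocopy reports its result through the exit code, and values of 8 or above indicate that at least one file or folder failed to copy, so a plain "non-zero means failure" check would be misleading here.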

You can map your way up the directory tree using Subst or Net Use to avoid the length limit; I have successfully used that in combination with Robocopy to copy very long paths and file names. That said, newer versions of Robocopy are supposed to handle paths and file names longer than 255 characters, as they support Unicode now.

An example of what I mean (the script is untested but should work, and might need minor tweaking):

    :: Script Name: CopyTree.bat
    :: Arguments as the script appears to use them: %1 is the source root,
    :: %2 is the path below it, which is also appended to \\Destination-Server.
    @ECHO OFF
    :Begin-Script
    :: /B returns bare folder names; "delims=" keeps names that contain spaces.
    FOR /F "delims=" %%D IN ('DIR "%~1%~2" /A:D /B') DO (
        REM Clear any earlier mappings, then map X: to the source subfolder.
        SUBST X: /D
        NET USE Y: /DELETE /Y
        SUBST X: "%~1%~2\%%D"
        REM Map the destination parent, create the subfolder, then remap Y: onto it.
        NET USE Y: "\\Destination-Server%~2"
        MD "Y:\%%D"
        NET USE Y: /DELETE /Y
        NET USE Y: "\\Destination-Server%~2\%%D"
        REM Copy this level's files through the short X: and Y: paths.
        ROBOCOPY X:\ Y:\ /ZB /R:2 /W:2
        SUBST X: /D
        NET USE Y: /DELETE /Y
        REM Recurse into the subfolder just copied.
        CALL :Begin-Script "%~1" "%~2\%%D"
    )
    GOTO :EOF